Last night on Twitter there was a discussion about RDM vs. VMFS performance. I have always told everybody that RDM does not have a performance advantage over a VMDK file on a VMFS volume. I have referred people to the following document by VMware: Performance Characteristics of VMFS and RDM, but I had never done my own testing until now 🙂

My setup is ESXi 4.1 build 260247 with a 4Gb Fibre Channel HBA connected to a Sun StorageTek 2540 SAN. I ran the tests on a 10GB LUN located on Fibre Channel disks, using Iometer on a virtual machine running Windows 2003 SP2. I performed all tests on the 10GB LUN presented as either: physical RDM, virtual RDM, lazy zeroed VMDK on VMFS, or eager zeroed VMDK on VMFS.

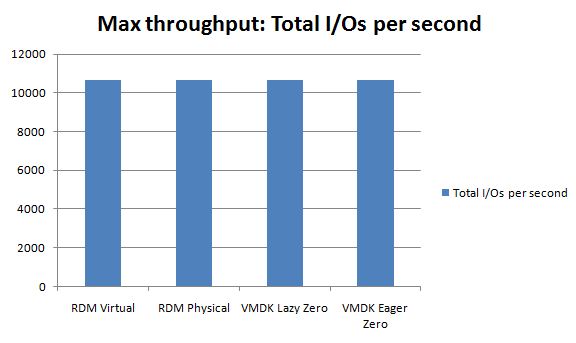

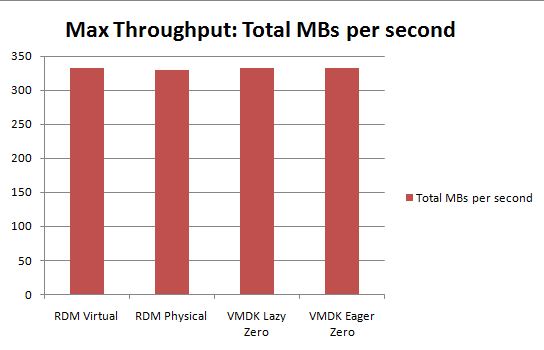

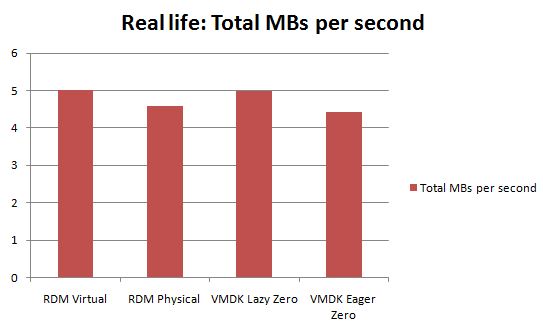

I configured Iometer with settings from the unofficial storage performance thread on the VMware communities and ran two tests: Max Throughput and Real Life. Here are my results:

Conclusion: VMFS and RDM have similar performance. Don’t choose RDM for performance.

I looked at this a while back for a homelab all-in-one box. Using four 1TB 7200RPM SATA II disks with RDM vs. VMFS to present to a FreeNAS/OpenFiler VM (I tried both to check the differences), I didn’t notice much of a difference. The big difference I’ve seen, both in the homelab and on iSCSI disk (15k SAS * 90 spindles), is in zeroing the drives. Just do it! Yes, it takes time. I think the biggest problem is this idea that it has to be instant. Just wait a few minutes for the VMDK to finish zeroing out before you complain about VMDK slowness.

A Sun StorageTek 2540 array supports a maximum of 48 drives of either SAS or SATA. Assuming 48 15K SAS drives, your theoretical IO max is right around 10000 and your MB throughput max should be in the range of 4GB/sec with 100% sequential read. Based on that, it looks like your test hardware was not able to provide enough IO/bandwidth to truly determine if there is a performance benefit. I find it surprising that your Real Life MB throughput is around 5MB/sec. I can get much better than this from a single SATA drive. A breakdown of your Iometer settings would be helpful to understand these results. Is it a 50/50 read/write split? How random? What size blocks are you using? Are you multi-threading Iometer with different profiles using several data sets? The thing that does not make any sense is why virtual RDM outperforms physical RDM. Do you have a plausible explanation for that result?

Thanks,

Ken

Hi Ken,

Take a look at the unofficial storage performance thread on the VMware communities. You can find the Iometer configuration files there. I don’t have 48 drives backing my LUNs. Only 12 drives!
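For anyone who wants to redo the spindle math from this exchange, here is a rough back-of-the-envelope sketch. The 200 IOPS per 15K drive figure is a common rule of thumb, not something measured on this array, and the 8KB block size for the Real Life test is an assumption about the Iometer profile. The helper names are made up for illustration. It ignores cache, RAID write penalty and the HBA link speed, so treat the numbers as ballpark only.

```python
# Rule-of-thumb figure (assumption, not a measurement from this array):
# a 15K RPM drive is often quoted at very roughly 200 random IOPS.
IOPS_PER_15K_DRIVE = 200


def spindle_iops(drives, iops_per_drive=IOPS_PER_15K_DRIVE):
    """Naive aggregate IOPS: drive count times per-drive IOPS."""
    return drives * iops_per_drive


def throughput_mb_s(iops, block_kb):
    """Convert an IOPS figure to MB/s for a given block size in KB."""
    return iops * block_kb / 1024


def iops_from_throughput(mb_s, block_kb):
    """Convert a MB/s figure back to IOPS for a given block size in KB."""
    return mb_s * 1024 / block_kb


if __name__ == "__main__":
    # 48 drives (the array maximum) vs. the 12 drives actually behind the LUN.
    print("48 drives:", spindle_iops(48), "IOPS")
    print("12 drives:", spindle_iops(12), "IOPS")

    # Assumed 8KB blocks: what ~5MB/s works out to in IOPS.
    print("5 MB/s at 8KB:", iops_from_throughput(5, 8), "IOPS")
```

With these assumptions, 48 drives land close to the 10000 IOPS mentioned above, while 12 drives come out at roughly a quarter of that.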